spark covid

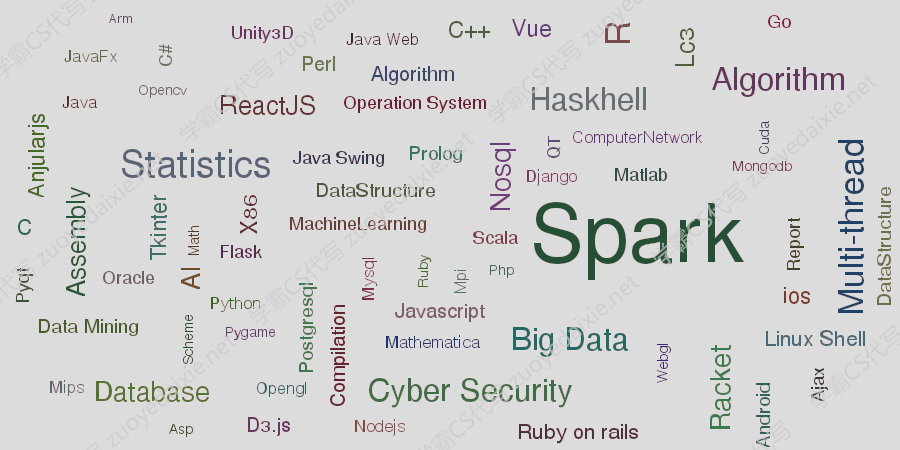


In [0]:

!pip install kaggle
!apt-get install openjdk-8-jdk-headless -qq > /dev/null
import os
os.environ["JAVA_HOME"] = "/usr/lib/jvm/java-8-openjdk-amd64"

# install spark
!wget -q https://downloads.apache.org/spark//spark-2.4.5/spark-2.4.5-bin-hadoop2.7.tgz
!tar xf spark-2.4.5-bin-hadoop2.7.tgz > /dev/null

# findspark.init() makes pyspark importable as a regular library.
os.environ["SPARK_HOME"] = "spark-2.4.5-bin-hadoop2.7"
!pip install -q findspark
import findspark
findspark.init()

# The entry point to using Spark SQL is an object called SparkSession. It initiates a Spark application on which all the code for that session will run.
# To learn more, see https://blog.knoldus.com/spark-why-should-we-use-sparksession/
from pyspark.sql import SparkSession
spark = (
    SparkSession.builder
    .master("local[*]")
    .appName("Learning_Spark")
    .getOrCreate()
)

Setup Kaggle Access

To use the Kaggle API, sign up for a Kaggle account at https://www.kaggle.com.

Then go to the 'Account' tab of your user profile on https://www.kaggle.com/ and select 'Create API Token'. This will trigger the download of kaggle.json, a file containing your API credentials. Open this file in an editor, then enter the username and key from it in the form below.

In [0]:

from getpass import getpass
username = getpass('Enter username here: ')
key = getpass('Enter api key here: ')

In [0]:

# set up kaggle configuration
api_token = {"username": username, "key": key}
!mkdir -p /root/.kaggle
import json
import zipfile
import os
with open('/root/.kaggle/kaggle.json', 'w') as file:
    json.dump(api_token, file)
!chmod 600 /root/.kaggle/kaggle.json

In [0]:

# download the kaggle data.
# note: to be able to download this data, you might have to verify your account with kaggle and visit the competition page at https://www.kaggle.com/c/covid19-global-forecasting-week-2/data first.
# learn more about this competition first: https://www.kaggle.com/c/covid19-global-forecasting-week-2/data
!kaggle competitions download -c covid19-global-forecasting-week-

In [0]:

# the command above should have downloaded three files; we are interested in test.csv and train.csv

Task 1: Open the file, print the schema and count the number of lines of test and train files. Print the number of cases in India [20 points]

use spark.read.csv

use the count function

use groupBy and then filter to select the rows for a country
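The hints above can be sketched as three small helpers. This is only one possible shape, not a reference solution. The column names `Country_Region` and `ConfirmedCases` are assumptions about the CSV header and should be checked against the output of `printSchema()`. Taking the maximum additionally assumes that `ConfirmedCases` is a cumulative count and that the country has a single row per date; neither is stated in this notebook.

```python
def load_csv(spark, path):
    """Read a CSV file with a header row and let Spark infer column types."""
    return spark.read.csv(path, header=True, inferSchema=True)


def schema_and_count(df):
    """Print the schema of a DataFrame and return its number of rows."""
    df.printSchema()
    return df.count()


def cases_for_country(df, country):
    """Group by country, then keep only the requested country.

    Assumed column names: Country_Region, ConfirmedCases.
    Assumed semantics: ConfirmedCases is cumulative, so max() is the latest total.
    """
    totals = df.groupBy("Country_Region").agg({"ConfirmedCases": "max"})
    return totals.filter(totals["Country_Region"] == country)
```

In the notebook this might be used as `train = load_csv(spark, "train.csv")`, then `print(schema_and_count(train))`, then `cases_for_country(train, "India").show()`, with `spark` being the session created earlier.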

In [0]:

# write code to open the file and count it – 10 points

In [0]:

# write code to group by and filter – 10 points

Task 2 – Get Data per Month on Specific Country [ points]

In [0]:

# the code for task 2 comes here
# write a map and reduce function with spark to count all cases in Fatalities in Australia in January, February and March. Then plot the trend
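To make the map and reduce steps concrete, here is a minimal plain-Python sketch on a handful of made-up rows. The row layout `(Country_Region, Date, Fatalities)`, the `YYYY-MM-DD` date format, and the sample values are all assumptions for illustration, and the sketch treats `Fatalities` as daily counts that can be summed. If the column turns out to be cumulative, a reducer such as `max` would be the more sensible choice.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical sample rows: (Country_Region, Date, Fatalities).
rows = [
    ("Australia", "2020-01-25", 0.0),
    ("Australia", "2020-02-10", 0.0),
    ("Australia", "2020-03-01", 1.0),
    ("Australia", "2020-03-02", 2.0),
    ("India", "2020-03-02", 5.0),
]


def to_month_pair(row):
    """Map step: turn one row into a (month, fatalities) pair."""
    _, date, fatalities = row
    return (date[:7], fatalities)  # "2020-03-01" -> "2020-03"


def add(a, b):
    """Reduce step: combine two values that share the same month key."""
    return a + b


def fatalities_per_month(rows, country):
    """Filter to one country, map to (month, value), reduce per month."""
    pairs = map(to_month_pair, (r for r in rows if r[0] == country))
    grouped = defaultdict(list)
    for month, value in pairs:
        grouped[month].append(value)
    return {m: reduce(add, vals) for m, vals in sorted(grouped.items())}


monthly = fatalities_per_month(rows, "Australia")
print(monthly)
```

The same two functions carry over to Spark: on an RDD of such tuples, `rdd.filter(...).map(to_month_pair).reduceByKey(add)` performs the grouping and reduction in one step, and the collected result can then be plotted.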

Task 3: Implement the Power of Elapsed Time Model in Spark [40 points]

$\text{number of cases} \approx C \times (\text{time elapsed since first infection})^{\alpha}$, where $C$ is a constant that depends on the size of the region (country), and the exponent $\alpha$ ranges from around 2 to 9, depending strongly on latitude (affected by temperature).

See the existing work at https://www.kaggle.com/robertmarsland/covid-19-spreads-as-power-of-elapsed-time to start.
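One common way to estimate the two parameters of a power law is ordinary least squares on the logarithms, since taking logs turns cases = C × (elapsed time)^exponent into a straight line. The sketch below uses that approach on synthetic data. It is not necessarily the method used in the linked notebook, and the names `fit_power_law` and `alpha` (for the exponent) are chosen here purely for illustration.

```python
import math


def fit_power_law(days, cases):
    """Fit cases = C * days**alpha by least squares in log-log space.

    Both inputs must be strictly positive so that their logarithms exist.
    Returns the pair (C, alpha).
    """
    xs = [math.log(d) for d in days]
    ys = [math.log(c) for c in cases]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    alpha = sxy / sxx                        # slope of the log-log line
    C = math.exp(mean_y - alpha * mean_x)    # intercept, mapped back from logs
    return C, alpha


# Synthetic data generated from cases = 2 * days**3 (not real COVID-19 data).
days = [1, 2, 3, 4, 5, 6]
cases = [2 * d ** 3 for d in days]
C, alpha = fit_power_law(days, cases)
print(round(C, 3), round(alpha, 3))
```

In Spark, the per-country day and case series would first be collected (or the sums above computed with aggregations per country), after which the same formulas apply to each region.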

In [0]:

#the code for task 3 comes here.