Lab 12: Cross Validation

代做homework | 作业IT | lab代写 – 这是一个IT面向对象设计的practice, 考察IT的理解, 涉及了IT等代写方面, 该题目是值得借鉴的lab代写的题目

In this lab, we will get more experience with features for linear models. We will f IT several models to the data with different combinations of features. Using cross validation, we will compare them based on their out-of- sample accuracy. Throughout we will work with the scikit-learn package

1. Fitting a model to data with linear regression

2. Splitting into training set and testing set

3. Dividing the training set into folds for cross validation

We will study a dataset about housing prices in Massachusetts. By completing lab 12 you will have a head start on homework 5 about housing prices in Iowa.

Collaboration Policy

Data science is a collaborative activity. While you may talk with others about the labs, we ask that you write your solutions individually. If you do discuss the assignments with others, please include their names at the top of this notebook.

list names here

In [ ]:

# import packages

import pandas as pd import numpy as np

import seaborn as sns import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split from sklearn.linear_model import LinearRegression from sklearn.model_selection import KFold

# set configuration

np.random.seed( 47 )

pd.options.display.max_rows = 20 pd.options.display.max_columns = 15

% matplotlib inline plt.rcParams[‘figure.figsize’] = ( 12 , 9 ) plt.rcParams[‘font.size’] = 16 plt.rcParams[‘figure.dpi’] = 150

# import additional packages for grading

import sys , os from IPython.display import Image

In [ ]:

# TEST

assert ‘pandas’ in sys.modules and “pd” in locals() assert ‘numpy’ in sys.modules and “np” in locals() assert ‘matplotlib’ in sys.modules and “plt” in locals() assert ‘seaborn’ in sys.modules and “sns” in locals()

assert np.random.get_state()[ 1 ][ 0 ] == 47

Introduction

For this lab, we will use a dataset to predict the house prices in Boston. You can access the data in boston_data.csv.

The data contains these features:

- CRIM per capita crime rate by town

- ZN proportion of residential land zoned for lots over 25,000 sq.ft.

- INDUS proportion of non-retail business acres per town

- CHAS Charles River dummy variable (= 1 if tract bounds river; 0 otherwise)

- NOX nitric oxides concentration (parts per 10 million)

- RM average number of rooms per dwelling

- AGE proportion of owner-occupied units built prior to 1940

- DIS weighted distances to five Boston employment centres

- RAD index of accessibility to radial highways

- TAX full-value property-tax rate per 10,000 USD

- PTRATIO pupil-teacher ratio by town

- LSTAT % lower status of the population

- MEDV Median value of owner-occupied homes in $1000’s

In [ ]:

path = ” {home} /shared/ {file} “.format(home = os.environ[“HOME”], file = “boston_d ata.csv”) boston = pd.read_csv(path)

boston

We want to predict the price of houses MEDV from the 12 features of the house. So the dependent variable is the housing price and the independent variables consist of CRIM through LSTAT. We want to explain the response in housing price to the features like crime rate of neighborhood and number of rooms in the building.

Question 1 : Splitting between Training Set and Testing Set

Before we can fit a model to the data, we want to hold divide the table into two pieces. The training set will consist of 90% of the rows. We will fit the model to the training set. The testing set will consist of 10% of the rows. We will evaluate the model on the testing set.

In [ ]:

X = boston.drop(columns=’MEDV’) Y = boston[‘MEDV’]

RANDOM_STATE = 47 # use this random state for tests to work

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size = 0.10, rand om_state = RANDOM_STATE)

Remember that we sample at random from a population to avoid bias in the study. Similarly we want to split the table at random to avoid bias in the predicitions.

However, we want to have reproducible findings meaning anyone can repeat the study. Since we need to have random numbers in the split between training set and testing set, we use a random seed (https://en.wikipedia.org/wiki/Pseudorandom_number_generator). Think of a random seed as the ignition of a random number generator.

Here we set the random seed to by 47. While we randomly split the table, we could call the function train_test_split (http://scikit- learn.org/stable/modules/generated/sklearn.model_selection.train_test_split.html) to get the same training set and testing set

Note that we have four sets corresponding to variables

X_train

Y_train

X_test

Y_test

How many rows and columns do we have in these sets?

In [ ]:

number_rows_X_train, number_columns_X_train = …, … number_entries_Y_train = …

number_rows_X_test, number_columns_X_test = …, … number_entries_Y_test = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert number_rows_X_train, number_columns_X_train == ( 455 , 12 ) assert number_entries_Y_test == ( 51 ,)

Question 2 : Linear Regression

Before we investigate different combinations of features, we should fit a linear model to the training set to describe the relationship between the housing price and the housing features.

We’ve imported LinearRegression from scikit-learn. We use the fit function on X_train and Y train to fit the model to the data.

In [ ]:

# YOUR CODE HERE raise NotImplementedError ()

Just like we use fit to fit the model to the data, we use predict to predict the response variable. Use predict to complete the calculation of the residuals.

In [ ]:

Y_pred = …

# YOUR CODE HERE raise NotImplementedError ()

residuals = Y_pred – Y_test

Having generated an array of residuals, we can compare predicted values and observed values on the testing set. We create a scatter-plot showing observed values and residuals.

In [ ]:

# YOUR CODE HERE raise NotImplementedError ()

True or False: The scatter-plot has outliers?

In [ ]:

q2_answer = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert q2_answer in [ True , False ]

Question 3 : Mean Square Error

We find that many of the residuals differ from 0. Remember that we can use the mean square error in linear regression to measure the difference between observed value and predicted value:

Fill out the function below and compute the MSE. The function should work for predictions on both the training data X_train and the test set X_test. Please avoid loops. Instead take advantag of numpy.

MSE =

1

n i = 1

n

( yi y ^ i )

2

In [ ]:

def mse(actual_y, predicted_y): “”” Input: predicted_y: an array of the prediction from the model actual_y: an array of the observed values

Output:

The mean square error between the prediction and the observation

"""

# YOUR CODE HERE

raise NotImplementedError ()

Compute the mean square error on the testing set and the training set.

In [ ]:

train_error = … test_error = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert np.isclose(test_error, 33.06, atol=0.01, rtol=0.01)

True or False: The mean square error on the testing set is higher than the mean square error on the training set?

In [ ]:

q3_answer = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert q2_answer in [ True , False ]

Question 4 : Cross Validation

Let’s try building a simpler linear model with fewer features. While this may increase our training error, it may also decrease our test error and help prevent testing errors.

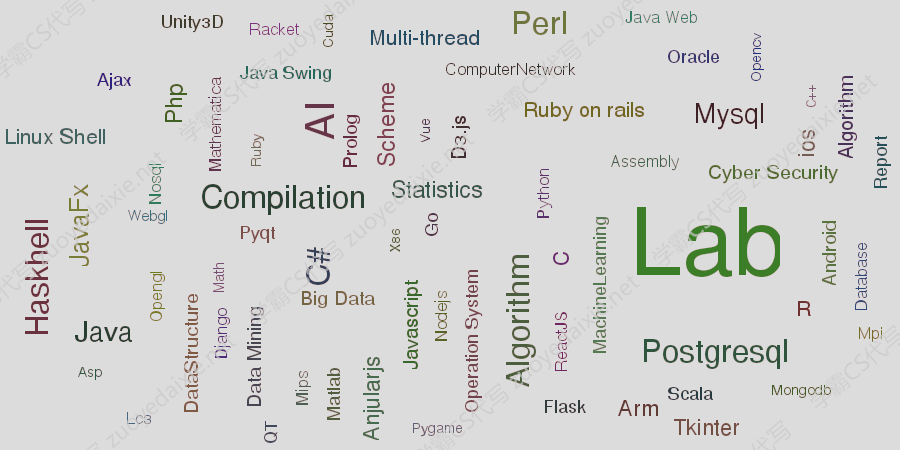

In the next section, we’ll use -fold cross-validation to select the best subset of features for our model. Recall the approach.

k

In [ ]:

Image(“cv.png”)

We will use the scikit-learn implementation of k -fold cross validation.

In [ ]:

number_folds = 4 kf = KFold(n_splits=number_folds)

validation_errors = [] for train_indices, validation_indices in kf.split(X_train): split_X_train = X_train.iloc[train_indices, :] split_X_validation = X_train.iloc[validation_indices, :]

split_Y_train = Y_train.iloc[train_indices]

split_Y_validation = Y_train.iloc[validation_indices]

linear_model = LinearRegression()

linear_model.fit(split_X_train, split_Y_train)

Y_prediction = linear_model.predict(split_X_validation)

error = mse(split_Y_validation, Y_prediction)

validation_errors.append(error)

Here we use 4 folds. The scikit-learn function KFold generates 4 pairs of indices. Each pair consists of the indices for the testing set and the validation set. We use the data for the testing set to fit the model. After we make a prediction on the validation set, we can compare observed values and predicted values with mse from Question 3. We have obtained 4 numbers.

In [ ]:

print(validation_errors)

Taking the average, we obtain the average mean square error across the 4 folds. Using the code above, write a function to compute the average mean square error for a model across folds of a training set.

1. Use the KFold.split (http://scikit-

learn.org/stable/modules/generated/sklearn.model_selection.KFold.html) function to get folds from the

training data. Note that split returns the indices of the data for that fold.

2. For each fold, select out the rows and columns based on the indices.

3. Compute the MSE on the validation split.

4. Return the average across all folds.

In [ ]:

def compute_CV_error(model, X_train, Y_train, number_folds = 4 ): ”’ Split the training data into 4 subsets. For each subset, fit a model holding out that subset compute the RMSE on that subset (the validation set) You should be fitting number_folds models total. Return the average MSE of these 4 folds.

Args:

model: an sklearn model with fit and predict functions

X_train (data_frame): Training data

Y_train (data_frame): Label

Return:

the average validation MSE for the 4 splits.

'''

kf = KFold(n_splits=number_folds)

validation_errors = []

for train_idx, valid_idx in kf.split(X_train):

# split the data

split_X_train, split_X_valid = ..., ...

split_Y_train, split_Y_valid = ..., ...

# Fit the model on the training split

...

# Compute the MSE on the validation split

error = ...

# YOUR CODE HERE

raise NotImplementedError ()

validation_errors.append(error)

return np.mean(validation_errors)

In [ ]:

# TEST

avg = compute_CV_error(LinearRegression(), X_train[[‘TAX’, ‘INDUS’, ‘CRIM’]],Y_t rain)

assert 55 < avg < 65

Question 5 : Selection of Features

We have defined four collections of features. Each collection contains three features.

For each collection, we use compute_CV_error from Question 4 to determine the average mean square error across 4 fold.

In [ ]:

feature_sets = [[‘CRIM’, ‘ZN’, ‘INDUS’], [‘CHAS’, ‘NOX’, ‘RM’], [‘AGE’, ‘DIS’, ‘RAD’], [‘TAX’, ‘PTRATIO’, ‘LSTAT’]]

errors = [] for features in feature_sets: print(“Trying features:”, features)

model = LinearRegression()

avg = compute_CV_error(model, X_train[features],Y_train)

print(" \t RMSE:", avg)

errors.append(avg)

Which collection of features was most accurate on average? Assign the collection of features to best_feature_set. Indicate the average mean squre error in best_err.

In [ ]:

best_err = … best_feature_set = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert best_feature_set in feature_sets

Question 6 : Linear Regression

For the sake of comparison to Question 2 where we used linear regression with 12 features, we want to assess the accuracy of the model from Question 5.

Fit a model with the features from Question 5.

Predict housing prices for the training set and testing set.

Compute the mean square error on the training set and testing set

In [ ]:

model = LinearRegression() model.fit(X_train[best_feature_set], Y_train)

train_mse = … test_mse = …

# YOUR CODE HERE raise NotImplementedError ()

In [ ]:

# TEST

assert np.isclose(train_mse, 33.87581897458931, atol=0.01, rtol=0.01)

Here we hae generated a scatter-plot with the residuals. Remember that if points in the residual plot are randomly scattered around the line y = 0, then we know that a linear regression model has good performance.

In [ ]:

residuals = model.predict(X_test[best_feature_set]) – Y_test

plt.axhline(y = 0 , color = “red”, linestyle = “dashed”) plt.xlabel(“Observed Price”) plt.ylabel(“Difference between Predicted Price and Observed Price”) plt.title(“Residuals on Testing Set”) plt.scatter(Y_test, residuals, alpha=0.5);

Nice! You’ve used -fold cross-validation to fit a linear regression model to the housing data.

In the future, you’d probably want to use something like cross_val_predict (http://scikit- learn.org/stable/modules/generated/sklearn.model_selection.cross_val_predict.html) to automatically perform cross-validation, but it’s instructive to do it yourself at least once.